Instead of waiting out the hours or days of delay that come with batch processing, companies can make decisions in milliseconds.

Materialize is a streaming SQL database company that wants to make it easier for established companies to implement real-time data analysis.

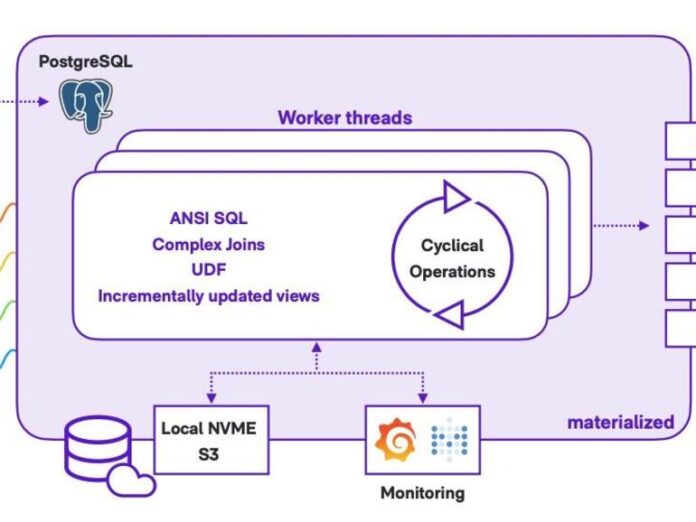

Image: Materialize

Just as an unexamined life is not worth living, unanalyzed data is not worth having. Acting on data coming in from sensors, Internet of things installations, 5G connectivity, and other sources is key to a positive ROI of digital transformation investments. Acting quickly on this river of data means analyzing it quickly. That’s where data streaming analysis comes in.

Instead of waiting out the hours or days of delay that come with batch processing, real-time analysis lets companies make decisions in milliseconds.

Paige Bartley, a senior research analyst for data management at 451 Research, part of S&P Global Market Intelligence, said streaming data analysis provides a living pulse of a company and its customers in ways that traditional processes, such as batch processing, cannot.

“There are many contemporary use cases in which stale or outdated data is as good as no data at all,” she said. “Those use cases are often candidates for streaming data integration and analysis, and they can be powerful drivers of digital transformation.”

Gartner predicts that by 2022 most major new business systems will use streaming data to deliver personalized experiences, spot fraud, build predictive AI, or discover new business opportunities.

Arjun Narayan, the founder and CEO of the streaming SQL database company Materialize, said that one barrier to adoption of streaming data analysis is the need to rearchitect data management infrastructure.

Companies such as Uber and Lyft built their businesses around the streaming model. More traditional companies have to retrofit data management systems built around batch processing that takes place once a day or even more infrequently.

“Every business needs to move to real time where all this data is flowing in and being analyzed within milliseconds,” he said.

Materialize is the first standard SQL interface for streaming data. Engineers can use it to build complex queries and multiway joins without specialized skills or microservices. Materialize computes and incrementally maintains data as it is generated so query results are accessible immediately as needed.
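To make the idea of incremental maintenance concrete, here is a minimal Python sketch. It is not Materialize's implementation or API; the class `IncrementalTotals`, the function `batch_totals`, and the sample events are all hypothetical names chosen for illustration. The sketch assumes a simple per-key sum and count, and contrasts updating a result as each event arrives with rescanning every event on each run.

```python
from collections import defaultdict


class IncrementalTotals:
    """Hypothetical maintained result: per-key totals kept current per event."""

    def __init__(self):
        self.totals = defaultdict(float)
        self.counts = defaultdict(int)

    def apply(self, key, amount):
        # Touch only the affected key; past events are never rescanned.
        self.totals[key] += amount
        self.counts[key] += 1

    def query(self, key):
        # Reading is a lookup because the answer is already maintained.
        return self.totals.get(key, 0.0), self.counts.get(key, 0)


def batch_totals(events):
    """Batch-style equivalent: walks the full event history on every run."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for key, amount in events:
        totals[key] += amount
        counts[key] += 1
    return totals, counts


# Illustrative events (made up for this sketch).
events = [("alice", 20.0), ("bob", 5.0), ("alice", 7.5)]

view = IncrementalTotals()
for key, amount in events:
    view.apply(key, amount)

print(view.query("alice"))
```

Both paths give the same answer. The difference is when the work happens: the incremental version pays a small cost per event so that a query is only a lookup, while the batch version pays the full cost each time it runs.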

Narayan’s goal with Materialize is to make streaming data analysis as easy to use as a batch processing system. To accomplish that, he built a solution around SQL to take advantage of the large existing user base.

“The same skills people have been using for 30 years, now they work on streaming data too,” he said.

Materialize combines data streams from multiple sources and provides a SQL query engine to run on top of streaming data feeds. Confluent and Databricks have similar products. Narayan said that many customers use Kafka to get data into Materialize.

Bartley said organizations routinely report real-time data integration as one of the top challenges faced in their analytics programs.

“Organizations now realize that they cannot assure high-quality data for real-time or near-real-time analysis without robust and consistent real-time data integration,” she said.

Another advantage of using SQL to analyze streaming data is that companies can use existing business intelligence tools.

Bartley said the barriers to achieving real-time data integration include complex architecture, legacy systems, a shifting product landscape, cultural challenges, and even defining relevant use cases.

“Streaming data management and streaming data analysis represent a fundamental shift from traditional data analysis performed on data ‘at rest,’ and skills shortages are common,” she said.

Streaming data analysis allows companies to conduct more complicated analysis in real time, such as recommending accessories to a shopper buying a gaming console or flagging a transaction as fraudulent within 100 milliseconds. For banks dealing with fraud, it’s cheaper to deny a transaction in real time than to reverse the charges the next day.

“If you wait to process data the next day, more than a few opportunities are lost,” Narayan said.
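The fraud-flagging case can be sketched as a check that runs on each transaction as it arrives, rather than in a next-day batch. The Python below is an illustration only and assumes a simple, hypothetical rule: flag a card that makes more than three transactions within 60 seconds. The class name `VelocityCheck`, the threshold, and the window length are assumptions, not figures from any bank or vendor.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60.0   # hypothetical look-back window
MAX_TXNS_IN_WINDOW = 3  # hypothetical threshold


class VelocityCheck:
    """Flags a card that transacts too often inside a sliding time window."""

    def __init__(self, window=WINDOW_SECONDS, limit=MAX_TXNS_IN_WINDOW):
        self.window = window
        self.limit = limit
        self.recent = defaultdict(deque)  # card_id -> recent timestamps

    def is_suspicious(self, card_id, timestamp):
        times = self.recent[card_id]
        # Drop timestamps that have fallen out of the window.
        while times and timestamp - times[0] > self.window:
            times.popleft()
        times.append(timestamp)
        # The decision is made immediately, per transaction.
        return len(times) > self.limit


check = VelocityCheck()
# Timestamps in seconds for one card (made up for this sketch).
results = [check.is_suspicious("card-1", t) for t in (0, 10, 20, 30)]
print(results)
```

Because each decision only consults a small amount of per-card state, it can be made while the transaction is still pending, which is the point at which denying it is cheaper than reversing it later.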

In addition to requiring a near-immediate turnaround, these decisions require a lot more data to power the analysis.

Gartner predicts that 75% of enterprises will shift from testing AI to operationalizing it by the end of 2024, which will drive a 5X increase in streaming data and analytics infrastructures.

Streaming data analysis also can help companies manage the increased volume of data coming from sensors and other new sources.

“Streaming tech can do meaningful processing on that explosion of data with low latencies,” Narayan said.

Narayan said he thinks batch processing is becoming a thing of the past with most new data analysis use cases taking a streaming-first approach. Batch processing will be used only for legacy data pipelines.

Materialize announced this week that it raised $40 million to hire more engineers and extend product rollout.

Bucky Moore, a partner at Kleiner Perkins who led the current funding round, said that Materialize has been studying this problem for a decade and has built a unique approach.

“Every business is going to be a real-time business within the next few years, but current approaches require compromises between cost, speed and skills to get there,” he said.

Narayan said he will double the size of the company’s engineering team over the next year and add new roles in other departments as well.